Just_Super/E+ via Getty

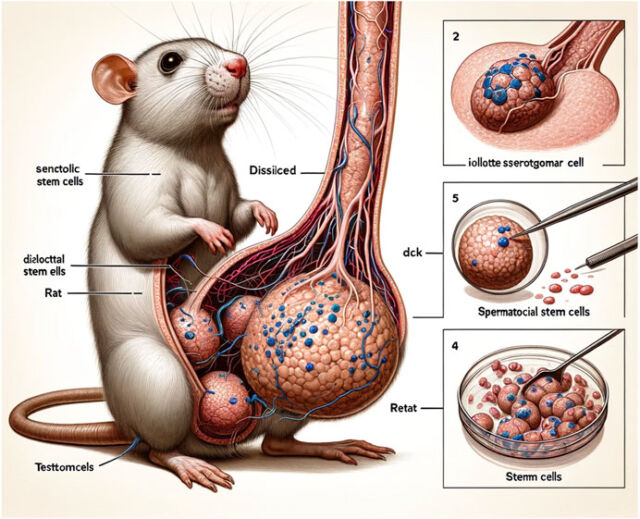

Last month, we witnessed the viral sensation of several egregiously bad AI-generated figures published in a peer-reviewed article in Frontiers, a reputable scientific journal. Scientists on social media expressed equal parts shock and ridicule at the images, one of which featured a rat with grotesquely large and bizarre genitals.

As Ars Senior Health Reporter Beth Mole reported, looking closer only revealed more flaws, including the labels “dissilced,” “Stemm cells,” “iollotte sserotgomar,” and “dck.” Figure 2 was less graphic but equally mangled, rife with nonsense text and baffling images. Ditto for Figure 3, a collage of small circular images densely annotated with gibberish.

The paper has since been retracted, but that eye-popping rat penis image will remain indelibly imprinted on our collective consciousness. The incident reinforces a growing concern that the increasing use of AI will make published scientific research less trustworthy, even as it increases productivity. While the proliferation of errors is a valid concern, especially in the early days of AI tools like ChatGPT, two researchers argue in a new perspective published in the journal Nature that AI also poses potential long-term epistemic risks to the practice of science.

Molly Crockett is a psychologist at Princeton University who routinely collaborates with researchers from other disciplines in her research into how people learn and make decisions in social situations. Her co-author, Lisa Messeri, is an anthropologist at Yale University whose research focuses on science and technology studies (STS), analyzing the norms and consequences of scientific and technological communities as they forge new fields of knowledge and invention, such as AI.

The original impetus for their new paper was a 2019 study published in the Proceedings of the National Academy of Sciences claiming that researchers could use machine learning to predict the replicability of studies based solely on an analysis of their texts. Crockett and Messeri co-wrote a letter to the editor disputing that claim, but shortly thereafter, several more studies appeared, claiming that large language models could replace humans in psychological research. The pair realized this was a much bigger issue and decided to work together on an in-depth analysis of how scientists propose to use AI tools throughout the academic pipeline.

They came up with four categories of visions for AI in science. The first is AI as Oracle, in which such tools can help researchers search, evaluate, and summarize the vast scientific literature, as well as generate novel hypotheses. The second is AI as Surrogate, in which AI tools generate surrogate data points, perhaps even replacing human subjects. The third is AI as Quant: in the age of big data, AI tools can overcome the limits of human intellect by analyzing vast and complex datasets. Finally, there is AI as Arbiter, relying on such tools to more efficiently evaluate the scientific merit and replicability of submitted papers, as well as to assess funding proposals.

Each category brings undeniable benefits in the form of increased productivity, but also certain risks. Crockett and Messeri particularly caution against three distinct “illusions of understanding” that may arise from over-reliance on AI tools, which can exploit our cognitive limitations. For instance, a scientist may use an AI tool to model a given phenomenon and believe they therefore understand that phenomenon better than they actually do (an illusion of explanatory depth). Or a team might think they are exploring all testable hypotheses when they are really only exploring the hypotheses that are testable using AI (an illusion of exploratory breadth). Finally, there is the illusion of objectivity: the belief that AI tools are truly objective and, unlike humans, have no biases or point of view.

The paper’s tagline is “producing more while understanding less,” and that is the central message the pair hopes to convey. “The goal of scientific knowledge is to understand the world and all of its complexity, diversity, and expansiveness,” Messeri told Ars. “Our concern is that even though we might be writing more and more papers, because they are constrained by what AI can and can’t do, in the end, we’re really only asking questions and producing a lot of papers that are within AI’s capabilities.”

Neither Crockett nor Messeri is opposed to scientists using AI tools. “It’s genuinely useful in my research, and I expect to continue using it in my research,” Crockett told Ars. Rather, they take a more agnostic approach. “It’s not for me and Molly to say, ‘This is what AI ought or ought not to be,’” Messeri said. “Instead, we’re making observations of how AI is currently being positioned and then considering the realm of conversation we should have about the associated risks.”

Ars spoke at length with Crockett and Messeri to learn more.